Original link: http://blog.leapmotion.com/image-api-now-available-v2-tracking-beta/

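Previously you could read the Leap's data through OpenCV (but then you could not use the tracking algorithms they provide). Now the raw data is officially available, which mirrors how the Kinect v1 SDK progressed. People doing algorithm research will certainly need this video data, while others may not; I'm reposting it here for those who do~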

You asked for it, you got it! By popular demand, we’ve just released the Image API, which lets you access raw data from the Leap Motion Controller for the first time. Applications built with version 2.1.0 of our SDK will be able to access our hardware like a webcam. Using this data, you can add video passthrough into your applications, which opens up a wide range of possibilities for developers experimenting with computer vision, 3D scanning, marker tracking, and object recognition.

What it can see

The data takes the form of a grayscale stereo image of the near-infrared light spectrum, separated into the left and right cameras. Typically, the only objects you’ll see are those directly illuminated by the Leap Motion Controller’s LEDs. However, incandescent light bulbs, halogens, and daylight will also light up the scene in infrared. You might also notice that certain things, like cotton shirts, can appear white even though they are dark in the visible spectrum.

How it works

When requested by a client application, the Leap Motion Service sends raw images as a part of each Frame object, alongside the existing hands, tools, and gesture data. (This is possible thanks in part to our Autowiring library.)

When the Image API is enabled through its policy flag, the Leap::Frame object provides a list of images containing one Image object from each of the two cameras. The main function of the Image class, data(), exposes a pointer to the buffer of 8-bit brightness values in the image. The height and width of the image represented by this buffer can be queried using height() and width() respectively.
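As a rough illustration of how such a buffer can be read, here is a minimal sketch that does not depend on the Leap SDK. The helper name `brightnessAt` is hypothetical, and the row-by-row, one-byte-per-pixel layout is an assumption; the pointer and dimensions stand in for what data(), width(), and height() would supply.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical helper (not part of the Leap SDK): read one brightness value
// from an 8-bit grayscale buffer such as the one exposed by data().
// Assumption: pixels are stored row by row, one byte per pixel.
inline std::uint8_t brightnessAt(const unsigned char* data,
                                 int width, int height,
                                 int x, int y) {
    assert(data != nullptr);
    assert(x >= 0 && x < width && y >= 0 && y < height);
    return data[static_cast<std::size_t>(y) * width + x];
}
```

With a real image, a call would presumably look like `brightnessAt(image.data(), image.width(), image.height(), x, y)`, where `image` is one of the two entries in the frame's image list.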

Lens distortion compensation

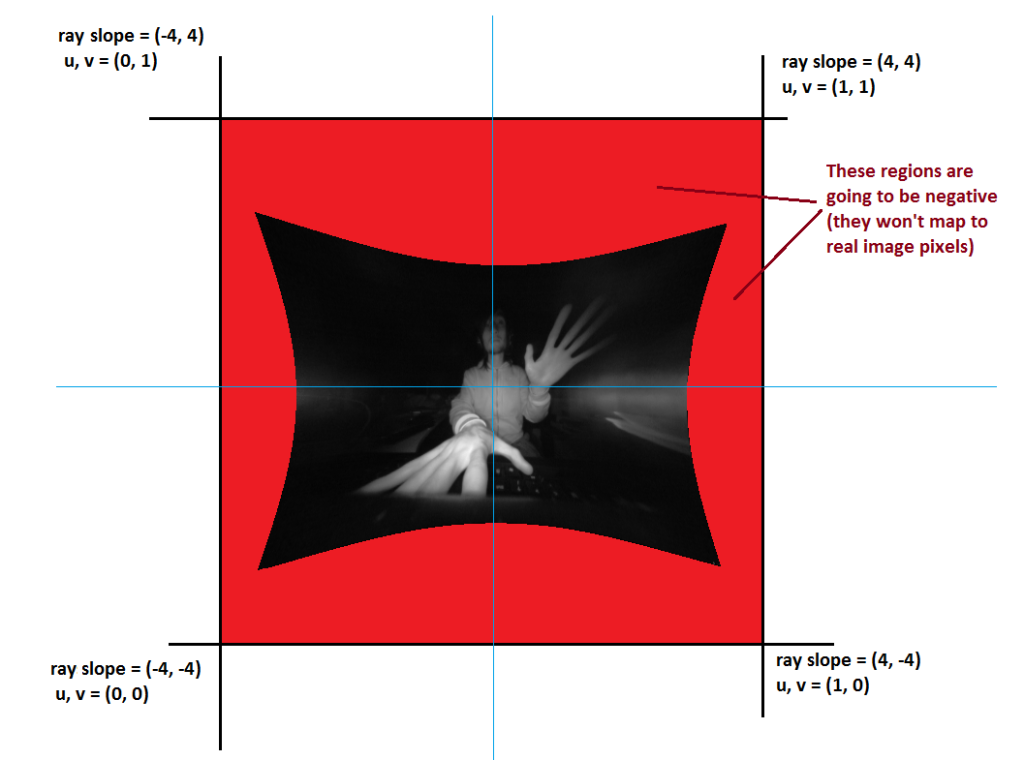

We’ve also provided functions to correct for the camera lens distortion, allowing each pixel in the image to map to the exact direction that a ray of light came from. This means that the image will appear undistorted.

The factors used for this correction are rayOffsetX, rayOffsetY, rayScaleX, and rayScaleY. Since the distortion data is intended to be used as a texture, and since that texture is indexed from 0 to 1, we want to convert ray queries (which range from -4 to 4) to the 0 to 1 range. This is achieved by texDist = ray*rayscale + rayoffset, where rayscale = 1/8 and rayoffset = 0.5. (In fact, these are the very values returned by the API.)
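The mapping above can be sketched in a few lines. The function names are hypothetical, and the scale and offset arguments stand in for the per-axis values the API reports (rayScaleX/rayOffsetX or rayScaleY/rayOffsetY). The inverse is included only to show that the mapping is a plain linear rescaling.

```cpp
#include <cassert>

// Hypothetical helper: map one ray component (roughly -4..4) to a
// distortion-texture coordinate (0..1) using texDist = ray*rayscale + rayoffset.
inline float rayToTexCoord(float ray, float rayScale, float rayOffset) {
    return ray * rayScale + rayOffset;
}

// Hypothetical inverse: recover the ray component from a texture coordinate.
inline float texCoordToRay(float texDist, float rayScale, float rayOffset) {
    assert(rayScale != 0.0f);  // a zero scale cannot be inverted
    return (texDist - rayOffset) / rayScale;
}
```

With rayscale = 1/8 and rayoffset = 0.5, a ray of -4 lands on texture coordinate 0, a ray of 0 on 0.5, and a ray of 4 on 1.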

If you perform a floating-point lookup into the distortion texture at that position (using the OpenGL texture type GL_RG and interpreting red as X and green as Y), then you’ll get another texture coordinate. This is the coordinate you then use to look into the raw image. That gives you the sought-after brightness, which can then be bilinearly interpolated.
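On a GPU the texture sampler normally handles the interpolation. For a CPU-side experiment, the final brightness lookup might be approximated as in the sketch below. The helper `sampleBilinear` is hypothetical. It assumes a row-major, one-byte-per-pixel buffer, and it maps coordinates 0 and 1 to the centers of the first and last pixels, which may differ slightly from OpenGL's own sampling convention.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>

// Hypothetical helper: bilinearly interpolated brightness at normalized
// coordinates (u, v). Values outside [0, 1] are clamped to the image border.
inline float sampleBilinear(const unsigned char* data, int width, int height,
                            float u, float v) {
    assert(data != nullptr && width > 0 && height > 0);
    const float x = std::min(std::max(u, 0.0f), 1.0f) * (width - 1);
    const float y = std::min(std::max(v, 0.0f), 1.0f) * (height - 1);
    const int x0 = static_cast<int>(std::floor(x));
    const int y0 = static_cast<int>(std::floor(y));
    const int x1 = std::min(x0 + 1, width - 1);   // right neighbor, clamped
    const int y1 = std::min(y0 + 1, height - 1);  // lower neighbor, clamped
    const float fx = x - x0;  // horizontal blend weight
    const float fy = y - y0;  // vertical blend weight
    auto at = [&](int px, int py) {
        return static_cast<float>(
            data[static_cast<std::size_t>(py) * width + px]);
    };
    const float top = at(x0, y0) * (1.0f - fx) + at(x1, y0) * fx;
    const float bottom = at(x0, y1) * (1.0f - fx) + at(x1, y1) * fx;
    return top * (1.0f - fy) + bottom * fy;
}
```

Here (u, v) would be the coordinate obtained from the distortion lookup, and the buffer would be the raw image for the same camera.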

Protecting user privacy

We’re aware of the privacy implications of having access to raw image data, and we take these issues very seriously. Currently, the Leap Motion Service will not send raw images to client applications unless the user explicitly opts into the feature via the Leap Motion Control Panel.

This feature has been in the works for a while, and as we continue to build it out, we’d love to get your feedback and hear your ideas for potential applications and features.

Further Reading: Check out the API documentation and Camera Images article on the dev portal for a more in-depth look at the Image API.

李逍遥说说
